I synthesized nine Wikipedia articles into a new article dedicated to BASIC interpreters:

A BASIC interpreter enables users to enter and run BASIC programs and was, for the first part of the microcomputer era, the default application that computers would launch. Users were expected to use the BASIC interpreter to enter in programs (often from printed listings) or to load programs from storage (often cassette tapes).

Why do I think a new article is warranted, rather than just referring to the BASIC and Interpreter articles? As I write in the article:

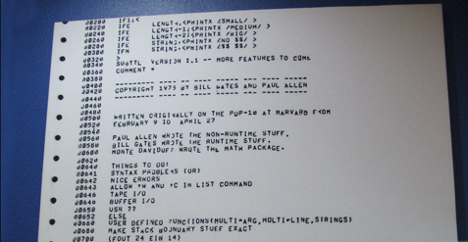

BASIC interpreters are of historical importance. Microsoft’s first product for sale was a BASIC interpreter (Altair BASIC), which paved the way for the company’s success. Before Altair BASIC, microcomputers were sold as kits that needed to be programmed in machine code (for instance, the Apple I); after the MITS Altair 8800, microcomputers were expected to ship with BASIC interpreters of their own (e.g., the Apple II, which had multiple implementations of BASIC). A backlash against the price of Microsoft’s Altair BASIC also led to early collaborative software development for Tiny BASIC implementations in general and Palo Alto Tiny BASIC specifically.

As software for microcomputers developed, you could use the interpreter just to CLOAD and RUN assembly language programs. Those might call BASIC ROM routines (say, for converting ASCII input to binary or vice versa). So BASIC interpreters were a proto-operating system.

BASIC interpreters were the command line, the primary UI, for tens of millions of microcomputer users. They powered hundreds of BASIC dialects and had specific techniques tailored to the language and early computers that differ from modern implementations of interpreters. An article on a specific BASIC won’t compare and contrast techniques. The synthesis is to start the article; I’ll add additional content over time.

Some of the content I added to my synthesis:

- Higher level programming languages on systems with extensive RAM have simplified implementing BASIC interpreters. For instance, line management is simple if your implementation language supports sparse matrices, variable management is simple with associative arrays, and program execution is easy with eval functions. As a result, coding BASIC interpreters has become part of the retrocomputing hobby.

- Language design for the first interpreters often simply involved referencing other implementations.

- Early microcomputers lacked development tools, and programmers either developed their code on minicomputers or by hand.

- Some BASIC interpreters were coded in the intermediate representation of a virtual machine instead of assembler, to add a layer of abstraction and conciseness above native machine language. (Integer BASIC’s ROM had a virtual machine, but it wasn’t used by the BASIC implementation.) [I had to correct this misunderstanding in the SWEET16 entry.]

- To save RAM, and speed execution, all BASIC interpreters would encode some ASCII characters of lines into other representations. For instance, line numbers were converted into integers stored as bytes or words, and keywords might be assigned single-byte values or “tokens” (for instance, storing PRINT as the byte value 145, in MS-BASIC). These representations would then be converted back to readable text when LISTing the program.

- Some systems, such as the Bally Astrocade and Sinclair systems, basically had the user do the tokenization by providing combined keystrokes to enter reserved words.

- Most of the memory used by BASIC interpreters was to store the program listing itself. In Tiny BASIC and Altair BASIC, the interpreter would search its memory, a line at a time. Lines were stored in a block of memory and were delimited by carriage returns, followed by the line number in two bytes. While the new line number was higher than the current line number, the pointer would be advanced to the next carriage return, to find the next line. If it were lower than the new line number, the later lines would be moved in memory to make room for the space required for the new line. If it were the same line number, and not the exact same length, subsequent lines would need to be moved forward or backward. Implementations such as this affected performance. A GOTO or GOSUB to a later line would take longer, as the program would need to iterate over all the lines to find the target line number. In Atari BASIC, the line length was recorded, so that the program did not have to scan each character of the line to find the next carriage return.

- With the exception of arrays and (in some implementations) strings, and unlike Pascal and other more structured programming languages, BASIC does not require a variable to be declared before it is referenced. Values typically default to 0 (of the appropriate precision) or the null string.

- Mathematical functions were sparse in Tiny BASIC implementations and Integer BASIC. In fact, the Design Note for Tiny BASIC requested only a RND random-number function, though Palo Alto Tiny BASIC added an ABS absolute value function and SIZE, to determine the remaining available RAM.

- Tiny BASIC implementations typically lacked string handling. Palo Alto Tiny BASIC would allow users to enter mathematical expressions as the answer to INPUT statements; by setting variables, such as Y=1 and N=0, the user could answer “Y” or “1” at a yes/no prompt.

- The range of design decisions that went into programming a BASIC interpreter were often revealed through performance differences.

Anyway, I’d love you to review the article and let me know where it is unclear or add to it if you’ve ever used BASIC interpreters in the past. Many thanks!